We all know the problem: the content is perfectly prepared and everything is in place, but the moment you hit the record button, your brain freezes, and what pops out of your mouth is a rain of “ums”, “uhs”, and “mhs” that no listener would enjoy.

Cleaning up a recording like that by manually cutting out every single filler word is a painstaking task.

So we heard your requests to automate filler word removal, started implementing it, and are now very happy to release our new Automatic Filler Cutter feature. See our Audio ...

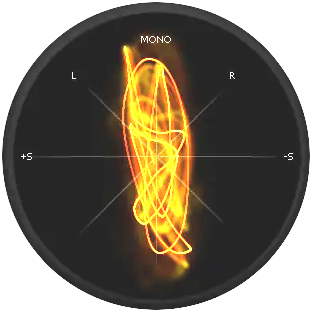
